If you find the title a tad bit cheesy and link-baitish, in my defence I would say that this is really exciting stuff. Stuff with time travel and cats! OK, maybe one cat. Who is also, probably, not relevant to the plot.

In a recent discussion on algorithm run-times, I faintly recalled that you could solve NP-complete problems if you could travel in time. Yes, time travel. Sure enough, some Googling later, I found the relevant arguments by Hans Moravec. Here is an article by him. He also discusses this in his book, Robot: Mere Machine to Transcendent Mind; unfortunately, I could not find an affordable copy. I present the arguments from a small portion of the article in this post.

Here's a high-level flow of what we're going to talk about:









- We discuss interesting modifications you can make to certain simple circuits if you could travel in time.

- Then we see how those modifications can be used to solve certain kinds of problems.

- And then we build on those ideas to solve some super tough algorithmic problems.

Circuits with feedback loops

Logic gates take as inputs binary signals - each a 0 or a 1 - and produce a binary output. We are interested in two of them:






- the amplifier (or buffer), which leaves the input signal unchanged, and

- the inverter, or NOT gate, which inverts the input.

Both these gates take in only one input. The following figure shows how they are represented - the fat end is the input and the pointed end is the output. The NOT gate looks like an amplifier with a, um, snot-nose.

|

| Fig 1. The amplifier and NOT gates |

There is a time delay, typically a few nanoseconds, between a gate consuming an input and producing the output. We will denote this delay by \(\Delta t\).

Consider what would happen if, after sending in an initial input signal, we immediately connect the output to the input and hold that configuration. This arrangement is known as a feedback loop. This is shown below for the NOT gate; the amplifier circuit looks similar. Note the device labelled "measure" - we're interested in measuring output at this point in the circuit.

|

| Fig 2. A feedback loop with the NOT gate |

The case for the amplifier seems pretty non-controversial: if we started with an input of 1, the output would be 1, which again feeds in, again becomes the output, and so on. All 1s. No problem.

The case for the NOT gate does not seem so simple. Say, we start with a signal of value 1 i.e. the first input to the gate is a 1. This is transformed into a 0 after \(\Delta t\). This 0 immediately becomes the new input (we assume no delays in the wire, the only delay is in the gate), and another \(\Delta t\) later 1 is the new output. Which then becomes the new input leading to an output of 0 another \(\Delta t\) later. It looks like after every duration equaling \(\Delta t\), the output switches state i.e. it alternates between 1 and 0, starting with a 0. How rapidly this switching happens depends on the time delay - smaller values of \(\Delta t\) lead to faster switching.

This is illustrated below. We start observing our feedback circuit at time \(t_1\). The readings obtained by the measuring device are shown as solid circles, green for 1 and red for 0. Intervals of length \(\Delta t\) are marked. The signal value in the wire between these intervals of measurement is also shown - as adjacent colored rectangles under the time axis. After each duration of length \(\Delta t\), the output becomes the new input immediately - this is shown by small tight loops with the appropriate color.

|

| Fig 3. Behavior with positive time delay |

The timeline for the amplifier holds no surprises. For the NOT gate, we see a green rectangle giving way to a red circle and a red loop, denoting inversion of the signal and this inverted signal becoming the new input. This alternation goes on because of how the circuit is built, i.e. the alternation exists by construction.

What would you measure if the speed of switching becomes infinitely fast? 0? 1? Or something midway like 0.5?
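
If you want to poke at the positive-delay behaviour yourself, here is a minimal Python sketch of the two feedback loops. The function names (`simulate_feedback`, `not_gate`, `amplifier`) are mine, made up for illustration; each loop pass stands for one \(\Delta t\), and the wire is assumed to add no delay of its own.

```python
def not_gate(bit):
    """Inverter: 1 becomes 0 and 0 becomes 1."""
    return 1 - bit


def amplifier(bit):
    """Buffer: passes the input through unchanged."""
    return bit


def simulate_feedback(gate, first_input, steps):
    """Return the readings taken once every delta-t in a feedback loop.

    Hypothetical helper: each pass of the loop models one gate delay,
    after which the output immediately becomes the next input.
    """
    readings = []
    signal = first_input
    for _ in range(steps):
        signal = gate(signal)     # the gate finishes after one delta-t
        readings.append(signal)   # the device samples the output
    return readings


not_readings = simulate_feedback(not_gate, 1, 6)
amp_readings = simulate_feedback(amplifier, 1, 6)
not_average = sum(not_readings) / len(not_readings)

print(not_readings)   # alternates, starting with 0
print(amp_readings)   # all 1s
print(not_average)    # average over an even number of readings
```

Averaging the NOT readings over an even number of steps gives 0.5, which is a hint about where the argument is headed, but it is only a classical stand-in, not a model of the time-travel circuit.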

Time travel

Before we answer that question, let's first ask how you make the switching infinitely fast. And I am not using the term "infinitely" merely as a metaphor.

One way is time travel. Which, of course, is as easy as taking a stroll in the park. If you could send signals into the past, you could add a negative time delay unit to the circuit (reminds me of reannual plants. Terry Pratchett, anyone?). The role of this unit is simple on paper: carry its input unchanged to a time in the past.

With a unit like this plugged into the circuit, as shown in the following figure, what are the implications?


|

| Fig 4. Negative delay!!! |

Consider a negative delay that conveys the signal back to the instant \(t_1\). In case of the NOT circuit, you might see yourself reasoning in this manner: "1 goes in, 0 comes out, which goes into the past implying that 1 hadn't gone in, 0 had. So 1 should come out. Which, alas, goes into the past and becomes the original input, and so 0 indeed should be the output ...".

Clearly we need a diagram for the sake of sanity.

The following figure shows the timeline in the spirit of the previous diagrams. But the negative delay causes interesting differences. We again start monitoring our circuit at \(t_1\) and start with a 1/green signal (iteration 1 in the diagram). Till the gate is done inverting the signal, i.e. in the interval from \(t_1\) to \(t_1+\Delta t\), we see the 1 signal in the wire - represented by the green rectangle. At \(t_1+\Delta t\), we measure a 0 - red circle - and this signal feeds back into the gate as the new input in the past, i.e. as if 0 was the input to begin with. The diagram shows the input arc stretching back to just around \(t_1\).

|

| Fig 5. Quantum superposition for the NOT gate |

Beyond this, iteration 2 begins, and a similar sequence of steps executes and leads to future iterations. While in Fig 3 the iterations progressed from left to right on the time axis, here they are all observed within the same time span, \(t_1\) to \(t_1+\Delta t\) - hence they are shown stacked vertically. The mind-warping catch here is that, like before, by construction, all these iterations will happen, and hence you will have an infinite number of both 0s and 1s, from different iterations, as legal values of the measurement at \(t_1+\Delta t\). All of them are supposed to show up at the same precise instant.

So, what does the device measure?

Say hello to the interesting notion of quantum superposition. The idea is that at the subatomic level entities can be in multiple states simultaneously. Here, the signal, which we assume to be a result of phenomena at the subatomic level, is both a 0 and a 1. Quantum mechanics also tells us that superposition exists until we measure the signal which leads to decoherence or its collapse: this is geek-speak for "one state is forced on the entity when we measure its state".

|

| Fig 6. A cat as promised. Because I used the word "quantum" and I like cats. |

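Here's where I ask you to ignore the specifics of what constitutes the "signal" and the "measuring device". Note how I haven't mentioned the signals are electrical in nature. I want you to think of all this time-travel business logically - 1/0 signals may be represented by positive/negative electrical charges, by light beams of opposite polarization, or something else entirely. As the original article by Moravec points out, what you end up measuring, using the device that suspiciously looks like an ammeter (it is not, it's a symbolic measurement device), depends a lot on these specifics.

But we are going to assume a few things. We are going to assume that the measurement shown by the device is an aggregate property of the stuff that constitutes the signal over its different iterations. So individual entities in this stuff can be either in a state of 0 or 1, and the device reports a value that's an average of these values. If a lot of these entities are at a value 0, you read a value close to 0. If 50% of them are at a value 0 and the remaining 50% are at 1, you read 0.5 off the device.

In our case, when the device tries to probe each of these entities to see if they are a 0 or 1, the aforementioned quantum collapse occurs. Some entities manifest as a 0, some as 1. In what ratio? Given the circuit setup, we have no reason to believe one state is produced more than the other - so we have an exact ratio of 50:50. And the device measures 0.5.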

You good? You aren't looking at me like this right now?

Iterative problem solving

You're probably thinking "All fine, but wasn't this guy schmoozing about problem-solving a while back?" - fret not, we are getting to that bit now.

There are many problems where a solution is arrived at by using an iterative algorithm. We will, in general, represent the problems we are interested in in the following manner: find the value of \(x\) that minimizes \(f(x)\). Some common steps that such an iterative algorithm takes are:

- The solution to the problem is arrived at via multiple iterations. Every iteration makes a new estimate of the solution.

- To start with, before iteration 1, we assume our estimate of the solution is an arbitrary value \(x_0\).

- Iteration \(i\) takes in the current guess \(x_{i-1}\), and uses it to come up with a new guess \(x_i\). In most cases, the new guess is also a better guess, i.e. \(f(x_{i-1}) \geq f(x_i)\). Here, \(i=1, 2, 3, \dots\)

- The solution to the problem, denoted by \(x^*\), where \(f(x^*)\) is the minimum value for \(f(x)\), is a fixed point of the algorithm: an iteration that consumes \(x^*\) also produces \(x^*\). So, if we find the solution in iteration \(k\), i.e. \(x_k = x^*\), then \(x_k = x_{k+1} = x_{k+2} = x_{k+3} = \dots = x^*\).
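
The steps above can be sketched in a few lines of Python. This is only a toy: the objective `f`, the update rule `iterate`, and the helper `run` are all made up for illustration (a one-dimensional search over integers), chosen so that each new guess is never worse than the previous one and the minimizer is a fixed point.

```python
def f(x):
    """Toy objective (an assumption for this sketch): minimized at x = 7."""
    return (x - 7) ** 2


def iterate(x):
    """One iteration: look at x and its two integer neighbours, keep the best.

    Once x is the minimizer, the best candidate is x itself, so the
    minimizer is a fixed point of this update.
    """
    return min((x - 1, x, x + 1), key=f)


def run(x0, max_iters=1000):
    """Iterate from x0 and record every guess until the guess stops changing."""
    history = [x0]
    x = x0
    for _ in range(max_iters):
        nxt = iterate(x)
        history.append(nxt)
        if nxt == x:          # fixed point reached: x* in, x* out
            break
        x = nxt
    return history


history = run(3)
print(history)
```

Starting from \(x_0 = 3\), the guesses climb one step at a time and then sit at 7 forever; that "sit there forever" part is what the circuit is going to exploit.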

Now let's go back to the circuits we have been talking about. What if, instead of the NOT gate, we had a complicated bit of circuitry that performed an iteration for us i.e. it performed Step 3 above? We would get the ball rolling by inputting \(x_0\), this would produce \(x_1\), which would produce \(x_2\), and so on, till we reach \(x^*\). Beyond this point the circuit only produces \(x^*\). The measuring device measures the current value of \(x_i\) in an iteration in this modified setup. Adapting the previous diagram, this is what would happen:

|

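| Fig 7. Stabilizing at the fixed point, x* |

Here's the million dollar question - what would the device show? We measured 0.5 in the previous case; what would it read now?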

Remember we assumed that the device reports the average of everything it sees. Here, it sees a bunch of different values of \(x_i\) to begin with, but once we hit \(x^*\), we only get this value from that point onward; we see an infinitude of \(x^*\) values. What is the average of a set of numbers that has an infinite number of copies of \(x^*\), and a finite number of other values? Yes, the device would measure \(x^*\).
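
Here is a back-of-the-envelope version of that averaging argument. It rests on an assumption of mine, not something spelled out in the article: the device averages the first \(N\) iterations, we let \(N\) grow without bound, and the fixed point is first reached in iteration \(k\).

\[
\bar{x}_N \;=\; \frac{1}{N}\left(\sum_{i=1}^{k-1} x_i \;+\; (N-k+1)\,x^*\right) \;\longrightarrow\; x^* \quad \text{as } N \to \infty
\]

The sum over the first \(k-1\) guesses is a fixed, finite number, so dividing it by \(N\) sends it to 0, while \((N-k+1)/N\) tends to 1.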

Take a while to think about what we've achieved. We start the circuit at \(t_1\) with some arbitrary \(x_0\) as input, and \(\Delta t\) time later the device coolly reads out the final answer \(x^*\). All the iterations that we are supposed to wait for ... are gone. Poof! The iterations are hidden from our sense of time, thanks to our hypothetical ability to travel in time.

Problems with no answers

This is a good place to discuss what happens when an iterative algorithm does not find an answer to a problem. There is no \(x^*\), and hence the algorithm keeps generating a bunch of values ad infinitum. It is important that our measuring device indicates this state of "no-answer", to help us distinguish it from cases where there is an answer. For example, it is bad if \(x\) can be any number, and the iterative algorithm somehow gets stuck generating 3 specific values in a round-robin fashion, and our device shows us the average of these 3 numbers. We would be misled into believing this to be the answer.

Whether a measuring device can do justice here depends on the implementation. In the specific case of the problem that we see in the next section, we will, fortunately, have a way to recognize these "no-answer" results.

Solving NP complete problems

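Finally, with all that elaborate setup, we are ready for the pièce de résistance. We take our mechanism one step further now and look at how it can be used to solve the NP-complete (NPC) problems. This class of problems is of significant interest in the computer science community.

The hallmark of NPC problems is that there is no known fast algorithm to solve them. If there are \(K\) candidate solutions to a problem, then, in the worst case, no known method does fundamentally better than enumerating these \(K\) candidates to identify the best - no known shortcuts. In fact, efficient solutions have so notoriously resisted discovery that there is a million dollar prize for anyone who finds one (or proves that none exists). I wasn't kidding when I said we are going to solve hard problems.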

As an aside, another interesting characteristic of NPC problems is that if you know how to efficiently solve one particular NPC problem, you can adapt that solution to solve any NPC problem. I mention this now since although we are going to pick up one NPC problem for illustration, let's be aware that all NPC problems are taken care of.

A popular example of an NPC problem is the Travelling Salesman Problem (TSP). A salesman is to visit each of \(n\) cities, starting at a particular city, and come back to the starting city; and he must pick a route that is the shortest. Fig 8 shows 4 cities A, B, C and D, and the distances between them.

|

| Fig 8. Travelling Salesman Problem. Source:[1] |

Some possible routes and their lengths are:

- A-B-D-C-A, 108

- A-C-B-D-A, 141

- A-B-C-D-A, 97

It so happens that 97 is the best you can do here. What makes this problem hard is that no efficient algorithm is known; the brute-force way to solve it is to enumerate all orderings of the cities, 4! = 24 here, and identify the shortest route. (Fixing the starting city and ignoring direction trims the count, but not the factorial growth.) For \(n\) cities this is \(n!\) possibilities (which is your \(K\) here), and while it works out for the above example with \(n=4\), the number of possible routes increases really fast with increasing \(n\).
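
For the 4-city example, brute force is easy to write down. In the sketch below, the edge weights in `DIST` are my reading of Fig 8; they are an assumption, though they do reproduce the three route lengths listed above. The names `route_length` and `all_routes` are hypothetical helpers. Since the start city is fixed at A, only 3! = 6 orderings actually need to be checked.

```python
from itertools import permutations

# Assumed edge weights for Fig 8 (symmetric distances between city pairs).
DIST = {
    frozenset("AB"): 20, frozenset("AC"): 42, frozenset("AD"): 35,
    frozenset("BC"): 30, frozenset("BD"): 34, frozenset("CD"): 12,
}


def route_length(route):
    """Sum the distances along consecutive cities of a route like 'ABCDA'."""
    return sum(DIST[frozenset(pair)] for pair in zip(route, route[1:]))


def all_routes(start="A", others="BCD"):
    """Every round trip that starts and ends at `start`."""
    return [start + "".join(p) + start for p in permutations(others)]


routes = all_routes()
best = min(routes, key=route_length)

print(len(routes))                 # number of candidate round trips
print(best, route_length(best))    # shortest route and its length
```

This is fine for 4 cities; the whole point of the next few paragraphs is that it stops being fine very quickly.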

To give you an idea: if I wanted to solve this problem for just the districts within the state of Karnataka, India - which has 30 districts - I will need to look through 30! routes. That is \(2.6 \times 10^{32}\) routes! Add to it the districts in the state of Maharashtra, for a total of 66 districts, and we are now looking at \(66! = 5 \times 10^{92}\) routes. You know why that is scary? - the number of atoms in the visible universe is \(\sim 10^{81}\). Good luck with finding the best route.
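
If you'd like to check those numbers rather than take my word for it, Python's arbitrary-precision integers make it a three-liner. The variable names are mine, and the atom count is just the rough figure quoted above.

```python
import math

routes_30 = math.factorial(30)   # orderings of 30 districts
routes_66 = math.factorial(66)   # orderings of 66 districts
atoms_in_universe = 10 ** 81     # rough estimate quoted in the text

print(f"30! is about {routes_30:.2e}")
print(f"66! is about {routes_66:.2e}")
print("66! exceeds the atom estimate:", routes_66 > atoms_in_universe)
```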

But we are not going to worry because we have time-travel on our side ;)

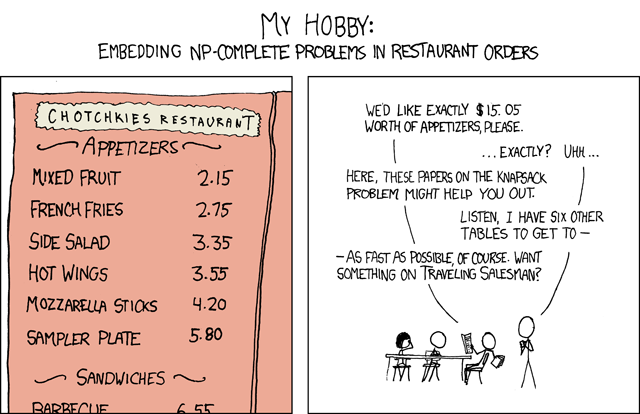

|

| This wouldn't have been, umm, "complete" without a xkcd comic strip. |


To retrofit our setup from the last section, we think of the solution estimates \(x_i\) as routes, and \(f(x_i)\) as returning the length of a route \(x_i\). We also add a new component to the setup - a knob that can be set to a number, say \(d\). The problem-solving circuitry, which performs the iteration in Step 3, is programmed to work in the following manner in iteration \(i\):

- If for the input route \(x_{i-1}\), we have \(f(x_{i-1}) \leq d\), output the same route i.e. show \(x_{i-1}\) as output.

- Else, generate the next permutation \(x_i\) and return this. Here, the "next" permutation is given by some ordering like the alphabetical ordering.
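
Here is a rough sketch of that iteration rule for the 4-city example. A few assumptions to flag: the edge weights in `DIST` are my reading of Fig 8, the names `step` and `settles` are hypothetical, and I let the ordering wrap around after the last permutation so the circuit never runs out of routes. Note that `settles` walks through the routes one at a time on an ordinary computer; it only shows what the time-travel circuit would end up displaying, not how fast it would get there.

```python
from itertools import permutations

# Assumed edge weights for Fig 8 (symmetric distances between city pairs).
DIST = {
    frozenset("AB"): 20, frozenset("AC"): 42, frozenset("AD"): 35,
    frozenset("BC"): 30, frozenset("BD"): 34, frozenset("CD"): 12,
}

# All round trips from A, in alphabetical order.
ROUTES = sorted("A" + "".join(p) + "A" for p in permutations("BCD"))


def route_length(route):
    """f(x): total length of a route such as 'ABCDA'."""
    return sum(DIST[frozenset(pair)] for pair in zip(route, route[1:]))


def step(route, d):
    """One iteration of the circuit with the knob set to d."""
    if route_length(route) <= d:
        return route                       # good enough: fixed point
    idx = ROUTES.index(route)
    return ROUTES[(idx + 1) % len(ROUTES)]  # else try the next permutation


def settles(d, start=ROUTES[0]):
    """Return the route the loop gets stuck on, or None if it never settles."""
    route = start
    for _ in range(len(ROUTES)):
        nxt = step(route, d)
        if nxt == route:
            return route
        route = nxt
    return None                            # cycled through everything


print(settles(10))    # knob too low: no route qualifies
print(settles(97))    # knob at the optimum
```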

Clearly, if there is a route with length less than or equal to \(d\), it's a fixed point for the algorithm - all iterations beyond the one that first sees such a route keep returning this route. The measuring device, which now sees an infinitude of instances of this route, would only display this route. If such a route does not exist - maybe because \(d\) is too small, for example \(d=10\) for Fig 8 - then the system becomes "unsteady" in the sense that all permutations sort of show up for the device to measure. If the device has a 16-segment display to show routes, we would probably see "weird" characters in the display due to multiple symbols trying to appear on top of each other.

This is how we solve this problem then: we start by holding the knob at a small value, where we presumably see an "unsteady" state. We keep turning it up slowly, till we see a proper route. The minimum \(d\) for which this happens is our answer. This is easy (and fast) to get right by trial and error.


|

| Mayday ... Mayday ... Mayday! Source: [2] |

Finer details:

- For TSP, you can always start with \(d=0\).

- How large can \(d\) be? There is an easy heuristic to determine this. Pick any route at random and calculate its length, say \(d'\) - this is how large you need \(d\) to ever be. Why? If you have not picked the shortest route, which is likely, then you will find your solution much before hitting \(d'\), when you work your way up from \(d=0\). If, by chance, you have picked the shortest route, then you will find your solution when your knob is exactly at \(d'\). In either case, you don't need to go beyond \(d'\).

- The elephant-in-the-room question: in what increments should you increase \(d\)? This depends on the precision of the numbers in the problem. In the above TSP example, all distances are integers, and hence the shortest distance will also be an integer - hence you could turn up the knob in units of \(1\) when moving from \(d=0\) to \(d=d'\). In the generic case, if your distances have 2 digits after the decimal point, like 54.25 km, then it makes sense to move up in increments of \(0.01\).

- Is this fast? Yes, very! For every position of the knob, you have your answer, or lack of it - the "unsteady" state - in \(\Delta t\) time. In this \(\Delta t\) time, all the circuit is doing is calculating the length of a route, which is a bunch of look-ups and sums; on a modern computer this would take much less than a second. A human would see an answer instantaneously for the knob held at a particular position.

- Come to think of it, since you have an upper bound \(d'\) now, you don't even have to move up linearly from \(d=0\) to \(d=d'\). You can do a binary search. This is a significant saving. If the number of decimal numbers you were exploring between two integers is \(p\) (\(p=1\) in the TSP example, and \(p=100\) for the example with 2 digits after the decimal point), then instead of holding the knob at \(pd'\) positions, you can make do with only \(O(\log pd')\) positions.

- Of course, the "knob" here is symbolic. In a simpler life, you can always have a program turn the metaphorical knob.
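
Having a program turn the knob might look something like the sketch below. Everything here is illustrative: `settles` is a classical stand-in for "the display shows a steady route" (it simply scans the routes, which the real circuit would not need to do), `smallest_steady_knob` is a hypothetical name, and the edge weights in `DIST` are my reading of Fig 8. The knob is moved in ticks of \(1/p\), and the upper bound \(d'\) comes from an arbitrarily picked route.

```python
from itertools import permutations

# Assumed edge weights for Fig 8 (symmetric distances between city pairs).
DIST = {
    frozenset("AB"): 20, frozenset("AC"): 42, frozenset("AD"): 35,
    frozenset("BC"): 30, frozenset("BD"): 34, frozenset("CD"): 12,
}
ROUTES = sorted("A" + "".join(p) + "A" for p in permutations("BCD"))


def route_length(route):
    """Total length of a route such as 'ABCDA'."""
    return sum(DIST[frozenset(pair)] for pair in zip(route, route[1:]))


def settles(d):
    """Stand-in for the device: a steady route if one has length <= d, else None."""
    for route in ROUTES:
        if route_length(route) <= d:
            return route
    return None


def smallest_steady_knob(d_upper, p=1):
    """Binary-search the knob over ticks of size 1/p between 0 and d_upper."""
    lo, hi = 0, d_upper * p          # tick indices; the knob is steady at hi
    probes = 0
    while lo < hi:
        mid = (lo + hi) // 2
        probes += 1
        if settles(mid / p) is not None:
            hi = mid                 # steady: the answer is at mid or below
        else:
            lo = mid + 1             # unsteady: the answer is above mid
    return lo / p, settles(lo / p), probes


d_prime = route_length("ACBDA")      # any route gives an upper bound d'
best_d, best_route, probes = smallest_steady_knob(d_prime)
print(best_d, best_route, probes)
```

With \(d' = 141\) this needs only a handful of knob positions instead of up to 141, which is the \(O(\log pd')\) saving mentioned above.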

[1] By Sdo - self-made using xfig, CC BY-SA 2.5, https://commons.wikimedia.org/w/index.php?curid=715485

[2] https://openclipart.org/detail/179999/16-segment-led
